Hello world! The Speckle team spent a lovely week in Tuscany for a company retreat, and par for the course was a 1-day hackathon where many of us met and coded with each other in person for the first time 🙌 (confirmed: no deep fakes among us).

We split into two groups, and Cristi, Reynold, and I (Claire) set up camp under our retreat gazebo to build a machine learning app that generates 3D point clouds from uploaded photos. Our underlying pitch was a simple one: we wanted to test how quickly and easily we could leverage existing ML resources to channel data captured in the real world into geometry you can interact with in desktop applications or on the web, in real time.

The app has the following features:

- Take or select photos through a simple web interface

- Automatically create a Speckle stream containing the generated image point cloud

- Receive a link to the Speckle stream, to view in your browser or for receiving in applications like Rhino

::: tip Give it a test drive

Want to test it out yourself? Our app is currently deployed at 👉ml.speckle.dev👈. While you're at it, take a few seconds to create a Speckle account if you don't have one already!

:::

::: tip Don't miss out!

The other team built a super cool carbon reporting app. Read about it 👉here

:::

Process, Scoping, and Challenges

Since most machine learning models are built and trained with PyTorch and other Python packages, it was clear we needed the Speckle Python SDK for our backend workflow, which runs an ML model and sends the results to a Speckle stream.

We chose to divide the labor into three parts: Cristi researched and implemented an ML model in Python, Reynold designed a point cloud class and figured out how to send it to a Speckle server using Speckle's Python SDK, and I was tasked with building a simple Vue.js app so users could upload their images for point cloud generation.

Although this was relatively new development territory for each of us, each piece fell into place without much trouble. Reynold found Speckle's Python SDK straightforward to implement, and readily followed the documentation on creating a new Base class and pushing results to a Speckle stream. Cristi had the most difficulty, wrestling with Python package dependencies and a slow internet connection while downloading the pre-trained model, but managed to start generating preliminary results once setup was complete. As for me, having previously followed Speckle's tutorial for building a simple Vue.js app, I was able to get the frontend up and running in just two hours. We spent most of our time debugging, trying to link up the three pieces, and experimenting with and refining the ML results!

The Technical Stuff aka DIY

For those curious to build their own Speckle ML app, we used the following tech:

- Vue.js for our frontend web app

- A pre-trained machine learning model, trained on the NYU Depth Dataset V2 for interior photos. The code is based on a paper titled "High Quality Monocular Depth Estimation via Transfer Learning" by Ibraheem Alhashim and Peter Wonka (2018).

- Speckle's Python SDK for creating our point clouds and sending them to a Speckle stream.
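
If you want a feel for what point cloud data can look like before it is handed to the SDK, here is a rough sketch. It is not the class from our repo: the names `pack_argb` and `flatten_point_cloud` are made up for illustration, and the layout (a flat list of x, y, z values plus one packed ARGB integer per point) is an assumption, picked because it is a common way to store colored point clouds.

```python
from typing import List, Sequence, Tuple

# A colored point as (x, y, z, (r, g, b)). This tuple layout is an
# illustrative assumption, not the hackathon's actual point cloud class.
ColoredPoint = Tuple[float, float, float, Tuple[int, int, int]]


def pack_argb(r: int, g: int, b: int, a: int = 255) -> int:
    """Pack 8-bit channels into a single unsigned ARGB integer."""
    return (a << 24) | (r << 16) | (g << 8) | b


def flatten_point_cloud(
    points: Sequence[ColoredPoint],
) -> Tuple[List[float], List[int]]:
    """Split colored points into a flat [x, y, z, x, y, z, ...] list
    and a parallel list with one packed color per point."""
    coords: List[float] = []
    colors: List[int] = []
    for x, y, z, (r, g, b) in points:
        coords.extend((x, y, z))
        colors.append(pack_argb(r, g, b))
    return coords, colors
```

Two flat lists like these are easy to attach as plain attributes on whatever object you end up sending, whichever class you choose to wrap them in.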

Our final hackathon code can be found in the Speckle Systems GitHub repo: https://github.com/specklesystems/SpeckleHackathon-ImgToPointCloud. ⚠ obligatory messy hackathon code warning ⚠

The Stack

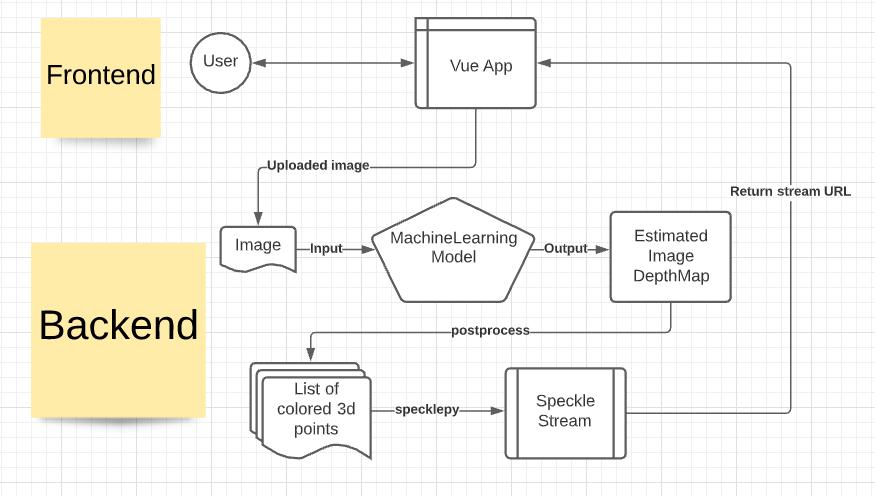

Our user sees a very simple frontend web app that prompts them to upload a photo and then displays a link to their point cloud once the photo has been processed.

In the background, we feed the photo into the ML model, which then returns a depth map of the image. The depth map and pixel image are interpreted into a set of XYZ points with color values, and we do a lil' bit of post-processing by adding extra points between any with a large difference in depth to fill in the gaps. These points are used to create a Speckle point cloud class object and sent to a newly generated Speckle stream. A link to this stream is then displayed on the web app, and once you have the stream you can receive the point cloud natively in any Speckle integrated desktop application 🙂
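
The depth-to-points step can be sketched in a few lines of plain Python. This is a simplified illustration, not the code from our repo: `depth_to_points`, `max_gap`, and `step` are hypothetical names, pixel column and row are used directly as X and Y (no camera intrinsics), and gaps are only filled between horizontal neighbors.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]
ColoredPoint = Tuple[float, float, float, Color]


def depth_to_points(
    depth: Sequence[Sequence[float]],
    rgb: Sequence[Sequence[Color]],
    max_gap: float = 0.5,  # hypothetical threshold for a "large" depth jump
    step: float = 0.25,    # hypothetical depth spacing of the filler points
) -> List[ColoredPoint]:
    """Turn a depth map and a same-sized RGB image into colored XYZ points.

    Pixel column -> X, flipped pixel row -> Y, depth value -> Z.
    """
    points: List[ColoredPoint] = []
    height = len(depth)
    for row in range(height):
        width = len(depth[row])
        for col in range(width):
            z = depth[row][col]
            # Flip Y so the top image row ends up with the largest Y value.
            x, y = float(col), float(height - 1 - row)
            color = rgb[row][col]
            points.append((x, y, z, color))

            # Post-processing: if the right-hand neighbor is much nearer or
            # farther, add evenly spaced points along the jump.
            if col + 1 < width:
                dz = depth[row][col + 1] - z
                if abs(dz) > max_gap:
                    n = int(abs(dz) / step)
                    for i in range(1, n):
                        t = i / n
                        # Filler points reuse the current pixel's color.
                        points.append((x + t, y, z + t * dz, color))
    return points
```

On a 2x2 depth map of `[[1.0, 1.0], [1.0, 2.0]]` with the default settings, the four pixels become four points and the single depth jump adds three filler points, for seven in total.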

Build it yourself!

- Clone the hackathon GitHub repo

- Download the NYU Depth V2 pretrained model, as described in the repo README

- Create a Docker image with all the requirements using `make build` in the `server` directory

- Edit the `Makefile` and set up the target Speckle server and a personal access token to use for uploading (you can do this in your account settings on speckle.xyz!)

- Run locally with `make run`

- After the model loads, open the app at http://localhost:8080

Takeaways and Next Steps

Although there are many products and platforms for 3D data capture in the real world - think point cloud scanners for high res depth imaging, AR/VR APIs for mobile apps, or expensive and time intensive cloud processors for generating meshes from photos - these workflows often require many proprietary software and hardware links that raise the barrier to build for people in the AEC+ industries.

The ease with which we were able to mobilize current machine learning resources to rapidly prototype an application that generates geometry from real-world input demonstrates how Speckle, as a data platform, can really liberate the flow of information through a constantly expanding field of input/outputs. Our results are limited by the sophistication of the underlying ML model, but serve as a proof of concept for integrating more and more robust AI technologies into AEC. If you're interested in hacking with us or developing this app into something useful, reach out on our community forum!