Ambient Occlusion inside the Speckle Viewer

The Speckle viewer features two distinct ambient occlusion implementations, one for dynamic and one for stationary scenarios. The dynamic scenario applies whenever the camera or any object is moving, while the stationary one applies once all movement has stopped, i.e. the camera and everything else in the scene is standing still. The two implementations share some concepts (for example, both are computed in screen space) but are otherwise distinct. We’ll go over each, with emphasis on the stationary ambient occlusion.

::: tip

First, check out what the final result looks like in the model below. To get a feel for it, try zooming and rotating the model, then stop to see the difference between the dynamic and progressive ambient occlusion modes.

:::

Dynamic Ambient Occlusion

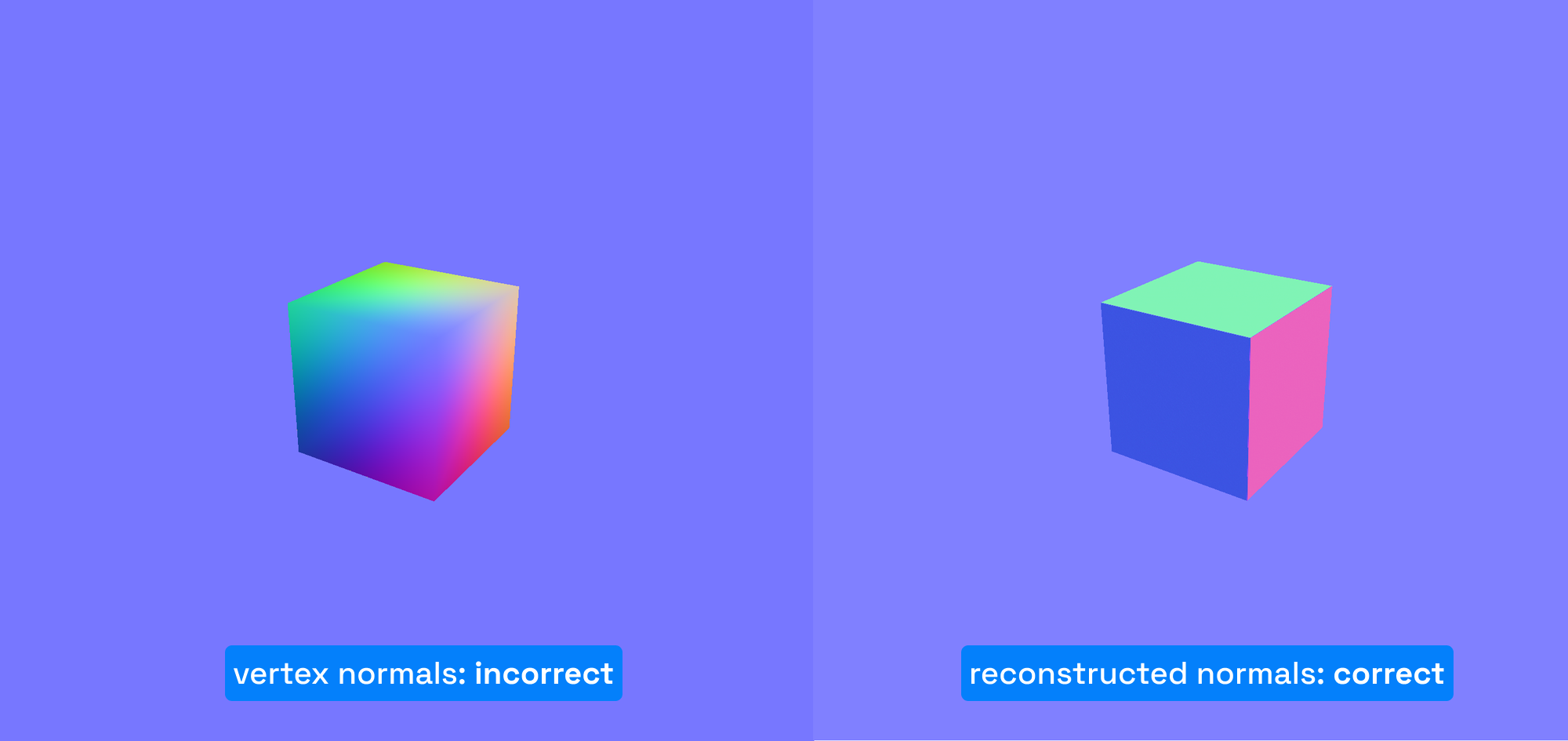

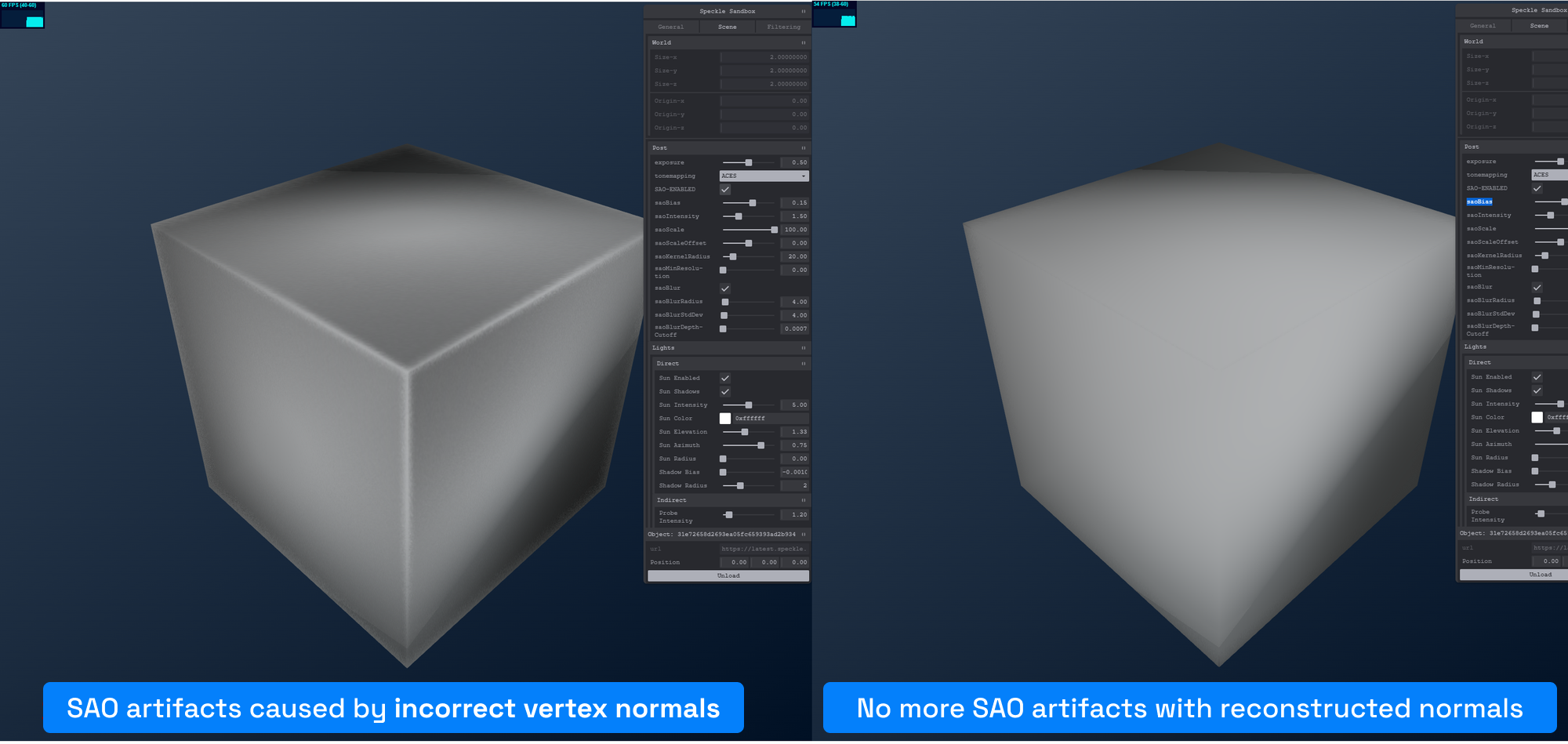

Our viewer’s dynamic ambient occlusion implementation is close to ThreeJS’s SAO implementation, which is based on Alchemy AO but uses a slightly different estimator. One difference from the stock ThreeJS SAO implementation is how we generate our normals texture. Stock ThreeJS uses a separate pass to generate the normals. However, that does not fit well with our typical Speckle streams. We need to churn through a lot of geometry, and simply re-passing it all through the GPU pipeline proved too slow for our liking. That’s why we chose to reconstruct the view space normals on the fly from depth. We started with this idea for the implementation but then moved to a better approach described here (the normal reconstruction write-ups are listed in the References). We still have both implementations under conditional compilation macros. Another advantage of reconstructing the normals is that it fixes AO artefacts caused by incorrect vertex normals.
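To make the idea of reconstructing normals from depth more concrete, here is a minimal CPU-side sketch in TypeScript of the simplest variant: rebuild the view space position of a pixel and of its right and upper neighbours, then take the cross product of the two differences. This is not the viewer’s shader code. The function names, the `depthAt` callback, and the assumption of a symmetric perspective frustum with depth stored as a positive linear view distance are all ours, for illustration only. The improved approaches in the references additionally choose which neighbours to use so that depth discontinuities do not corrupt the result.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Rebuilds a view space position from screen coordinates (u, v in [0, 1]) and a
// positive linear view depth. Assumes a symmetric perspective frustum looking down -Z.
function viewPosition(u: number, v: number, depth: number, fovY: number, aspect: number): Vec3 {
  const halfHeight = Math.tan(fovY / 2);
  const halfWidth = halfHeight * aspect;
  return [(u * 2 - 1) * halfWidth * depth, (v * 2 - 1) * halfHeight * depth, -depth];
}

// Naive reconstruction: cross product of the position deltas towards the right
// and upper neighbours. `depthAt` is a hypothetical depth buffer lookup.
function reconstructNormal(
  depthAt: (x: number, y: number) => number,
  x: number,
  y: number,
  width: number,
  height: number,
  fovY: number
): Vec3 {
  const aspect = width / height;
  const pos = (px: number, py: number): Vec3 =>
    viewPosition((px + 0.5) / width, (py + 0.5) / height, depthAt(px, py), fovY, aspect);
  const centre = pos(x, y);
  const dx = sub(pos(x + 1, y), centre);
  const dy = sub(pos(x, y + 1), centre);
  return normalize(cross(dx, dy));
}
```

For a surface at constant depth, this yields a normal pointing straight back at the camera, which is a quick sanity check for the sign conventions.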

After experimenting with reconstructed normals, the conclusion was that it’s faster than rendering the vertex normals in a separate pass for the typical Speckle streams.

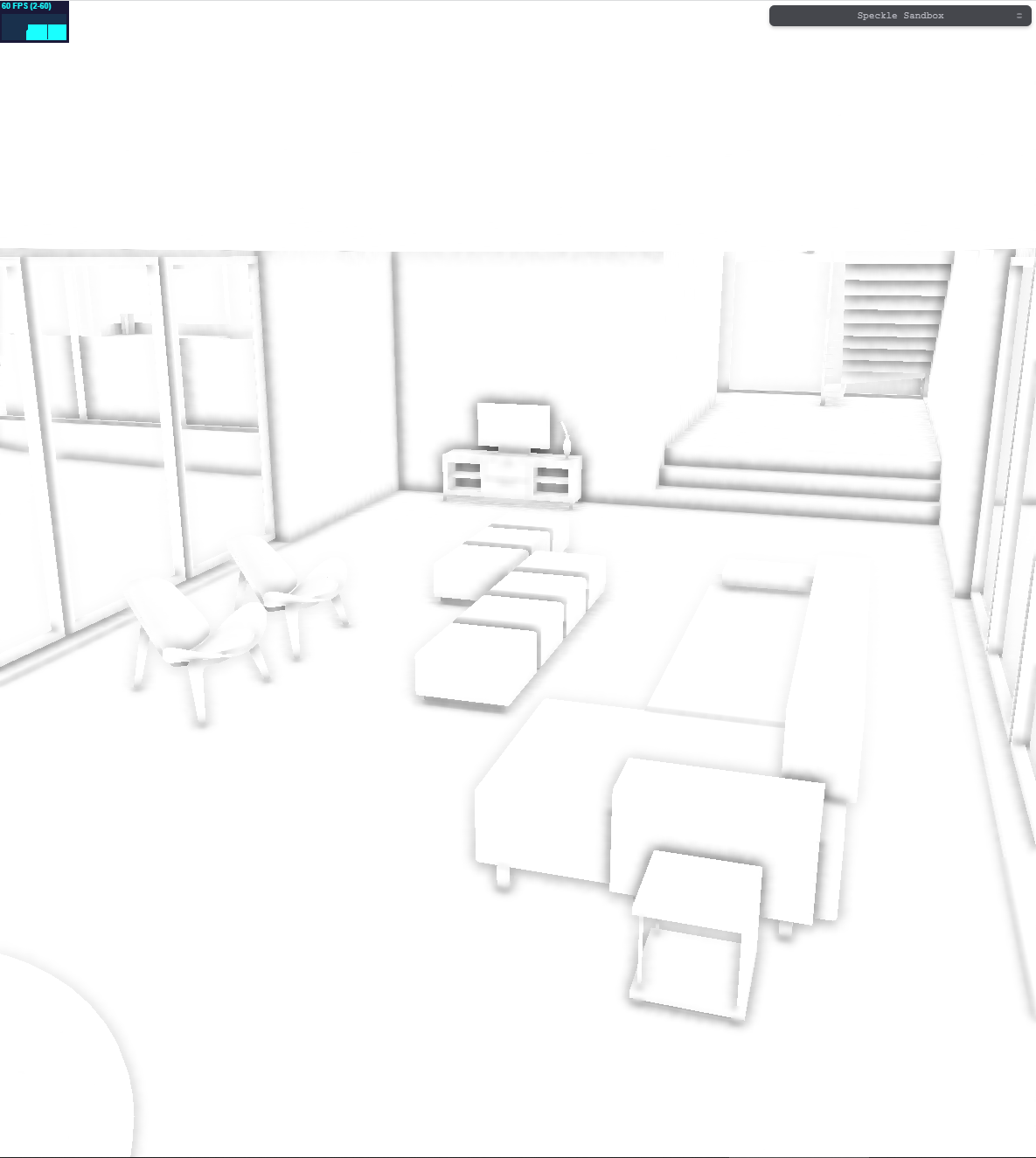

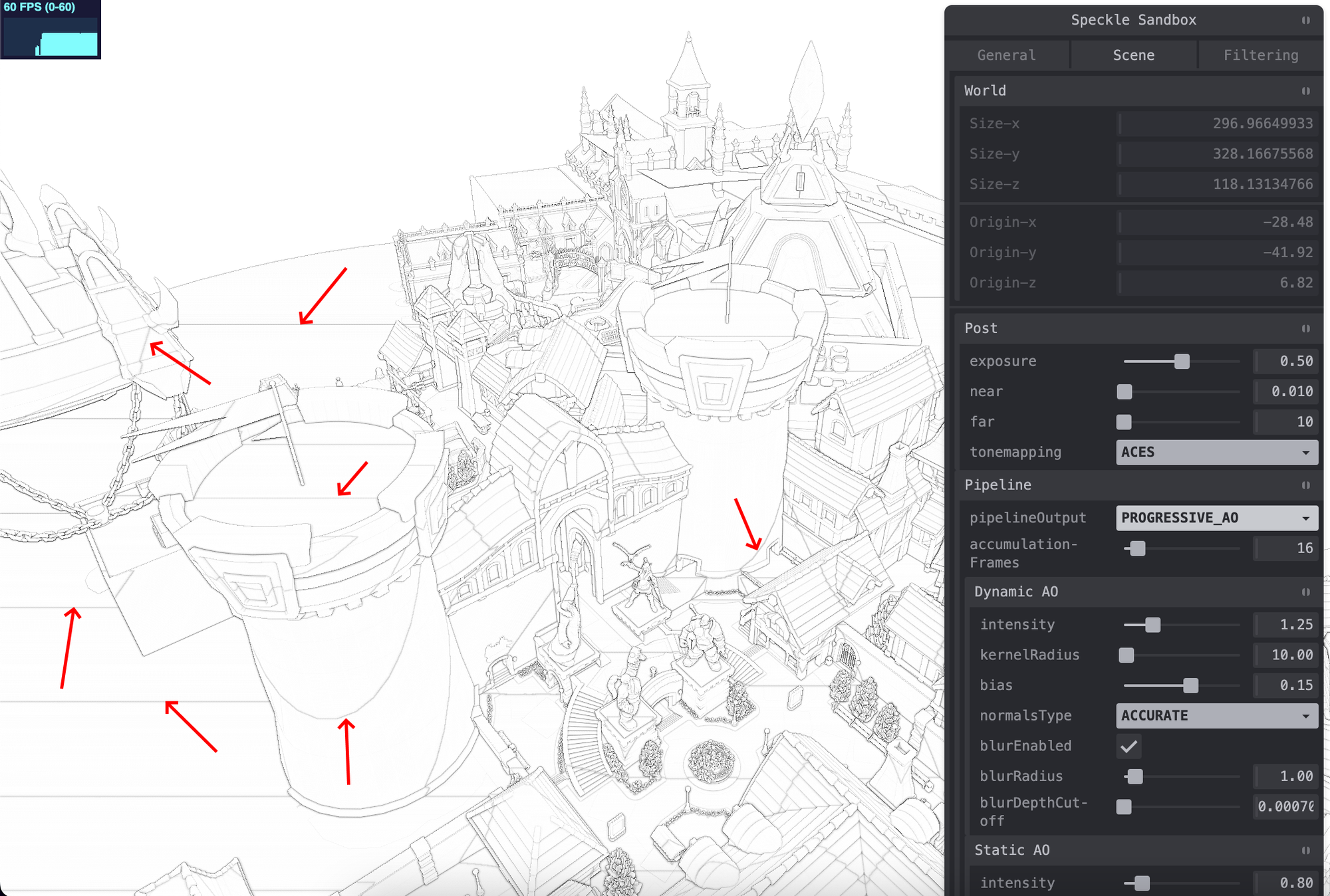

Apart from how the normals are generated, the dynamic ambient occlusion mostly sticks to ThreeJS’s stock implementation. Here’s an example scene showing how it looks.

Stationary, Progressive Ambient Occlusion

After adding dynamic AO to our viewer, we wanted to go one step further. SAO was OK, but we wanted the feel of a high-quality, non-noisy, non-blurry ambient occlusion. Typically, one can increase the quality of AO by simply taking more samples over a larger screen area. This, however, severely impacts performance, up to the point of being impractical.

The trade-off between performance and quality in any screen space ambient occlusion algorithm is of a spatial nature. You typically want to cover more screen space around each fragment you’re computing AO for, and as that space increases, so does the minimum number of samples required. This quickly leads to an unreasonable number of texture fetches, which will probably also thrash the texture cache. Because the Speckle viewer needs to run virtually everywhere, we do not have the luxury of ramping up the sample count for our dynamic ambient occlusion.

Could we take this spatial issue and turn it into a temporal one? The idea is relatively simple: instead of taking more samples in a single frame, we take a moderate number of samples per frame across multiple frames while accumulating the result. We started off with this core concept when implementing our progressive ambient occlusion. Since this was going to be a distinct ambient occlusion implementation, we also wanted to look more closely at how we would compute the actual ambient occlusion value. We chose three candidate algorithms and decided to give them all a try to see which one was better.

- Stock ThreeJS SAO estimator (based on McGuire’s). The same one we’re using for dynamic AO

- The original McGuire estimator

- SSAO. Uniform samples along a hemisphere. Actual depth comparison

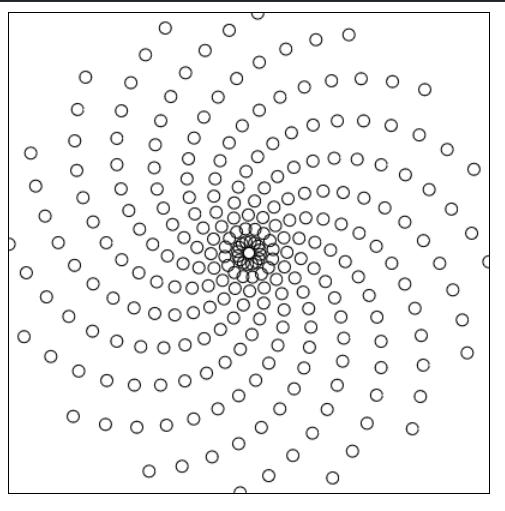

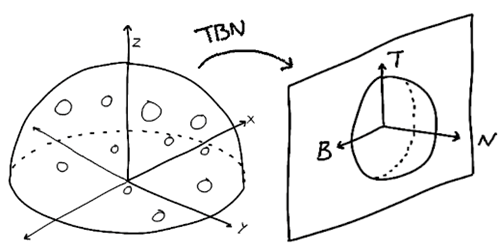

Before we can compare them, we’ll have to find a proper way to generate samples across sequential frames. Here, the fundamental approach might differ since not all three algorithms work the same way regarding how they compute the AO. For the first two, we went along with a spiral-shaped kernel like the one below:

Here is the fiddle which shows it running.
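As a rough illustration of how a spiral kernel can be split across sequential frames, here is a minimal TypeScript sketch. It is not the viewer’s code: the function name, the number of spiral turns, and the interleaving scheme (sample `i` of frame `f` maps to global index `i * frameCount + f`) are assumptions of ours. The interleaving is chosen so that every frame covers the whole radius range, and the frames only fill in the gaps between each other.

```typescript
interface SpiralSample {
  x: number;
  y: number;
}

// Hypothetical helper: returns the unit-disk offsets used by one frame of a
// spiral kernel whose samples are distributed over `frameCount` frames.
function spiralSamples(
  frameIndex: number,
  samplesPerFrame: number,
  frameCount: number,
  turns = 7 // number of spiral revolutions, an arbitrary illustrative value
): SpiralSample[] {
  const total = samplesPerFrame * frameCount;
  const samples: SpiralSample[] = [];
  for (let i = 0; i < samplesPerFrame; i++) {
    // Interleave frames so each one spans the full spiral.
    const globalIndex = i * frameCount + frameIndex;
    // Normalised position along the spiral, strictly inside (0, 1).
    const t = (globalIndex + 0.5) / total;
    const angle = t * turns * 2 * Math.PI;
    // Radius grows linearly with t, so the offsets stay inside the unit disk.
    samples.push({ x: Math.cos(angle) * t, y: Math.sin(angle) * t });
  }
  return samples;
}
```

With 16 samples per frame over 16 frames, the union of all frames gives 256 distinct offsets, which would then be scaled by the kernel radius before sampling.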

For SSAO, we’re generating n sample vectors along the unit hemisphere, scaled by the kernel size. Samples are randomly generated, which might lead to sub-optimal sampling, but we did this regardless.
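A minimal sketch of this kind of hemisphere kernel, in TypeScript, could look like the following. The helper names are hypothetical, the seeded generator is only there to make the example reproducible, and scaling each sample by an additional random length (so samples fill the hemisphere rather than sit on its surface) is our assumption, not something the viewer is confirmed to do.

```typescript
type Vec3 = [number, number, number];

// Small seeded PRNG (mulberry32) so the example is reproducible.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Generates `count` random sample vectors inside the +Z hemisphere,
// scaled by `kernelSize`.
function hemisphereSamples(count: number, kernelSize: number, seed = 1): Vec3[] {
  const rand = mulberry32(seed);
  const samples: Vec3[] = [];
  for (let i = 0; i < count; i++) {
    // Uniform direction on the hemisphere surface: uniform z in [0, 1)
    // and a uniform angle around the Z axis.
    const z = rand();
    const phi = 2 * Math.PI * rand();
    const r = Math.sqrt(1 - z * z);
    // Assumption: a random length spreads samples through the volume.
    const length = rand() * kernelSize;
    samples.push([r * Math.cos(phi) * length, r * Math.sin(phi) * length, z * length]);
  }
  return samples;
}
```

In a shader, each vector would typically be rotated into the tangent frame of the fragment’s normal before being added to the fragment’s position.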

Now that we know how to generate our samples for each algorithm, we’ll go through two stages for each frame. First, we’ll generate the ambient occlusion values for the current frame index, then accumulate them in a buffer that holds the previously accumulated values. The Speckle viewer currently generates 16 AO samples per fragment in each frame and accumulates for 16 frames. However, these values are configurable.

In the generation stage, we use the samples computed for the current frame to generate the AO values. We do not invert the AO at this point, so the resulting buffer holds occlusion as white. Each resulting AO value is scaled by the inverse of the kernel size.

In the accumulation stage, we take the generated AO values texture for the current frame and blend it into a texture buffer using the FUNC_REVERSE_SUBTRACT blending equation. This effectively inverts the AO values automatically. The accumulation buffers get cleared to 0xffffff at the beginning of each accumulation cycle, and each frame’s generated AO is divided by the total number of accumulating frames before it gets blended in.
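The arithmetic of the accumulation stage can be sketched on the CPU as follows. This is a simplified TypeScript model with hypothetical function names, not the viewer’s code, and it assumes both blend factors are ONE so that FUNC_REVERSE_SUBTRACT reduces to `destination - source`. Starting from white and subtracting each frame’s AO divided by the frame count leaves `1 - average(AO)` in the buffer, which is the inverted, averaged occlusion.

```typescript
// Clears the accumulation buffer to white (1.0 per pixel), mirroring the
// 0xffffff clear at the start of each accumulation cycle.
function clearAccumulation(pixelCount: number): Float32Array {
  return new Float32Array(pixelCount).fill(1.0);
}

// Blends one frame of generated AO (occlusion stored as white) into the buffer.
function accumulateFrame(buffer: Float32Array, frameAo: Float32Array, frameCount: number): void {
  for (let i = 0; i < buffer.length; i++) {
    const source = frameAo[i] / frameCount; // divided by the number of accumulating frames
    // FUNC_REVERSE_SUBTRACT with ONE/ONE factors (assumed): dst - src.
    buffer[i] = Math.max(0, buffer[i] - source);
  }
}

// Runs a full accumulation cycle over a list of per-frame AO buffers.
function accumulate(frames: Float32Array[]): Float32Array {
  const buffer = clearAccumulation(frames[0].length);
  for (const frame of frames) {
    accumulateFrame(buffer, frame, frames.length);
  }
  return buffer;
}
```

For example, a pixel that is half occluded in every frame ends up at 0.5 after the cycle, while a pixel that is never occluded stays white.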

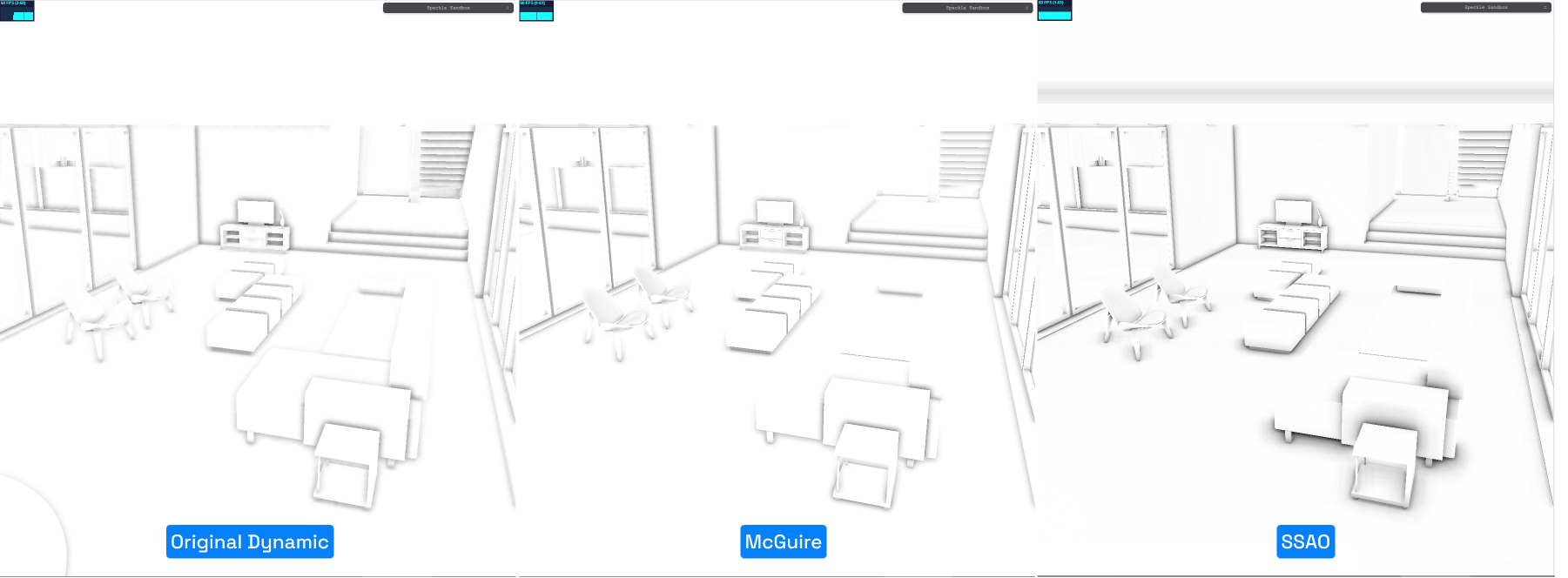

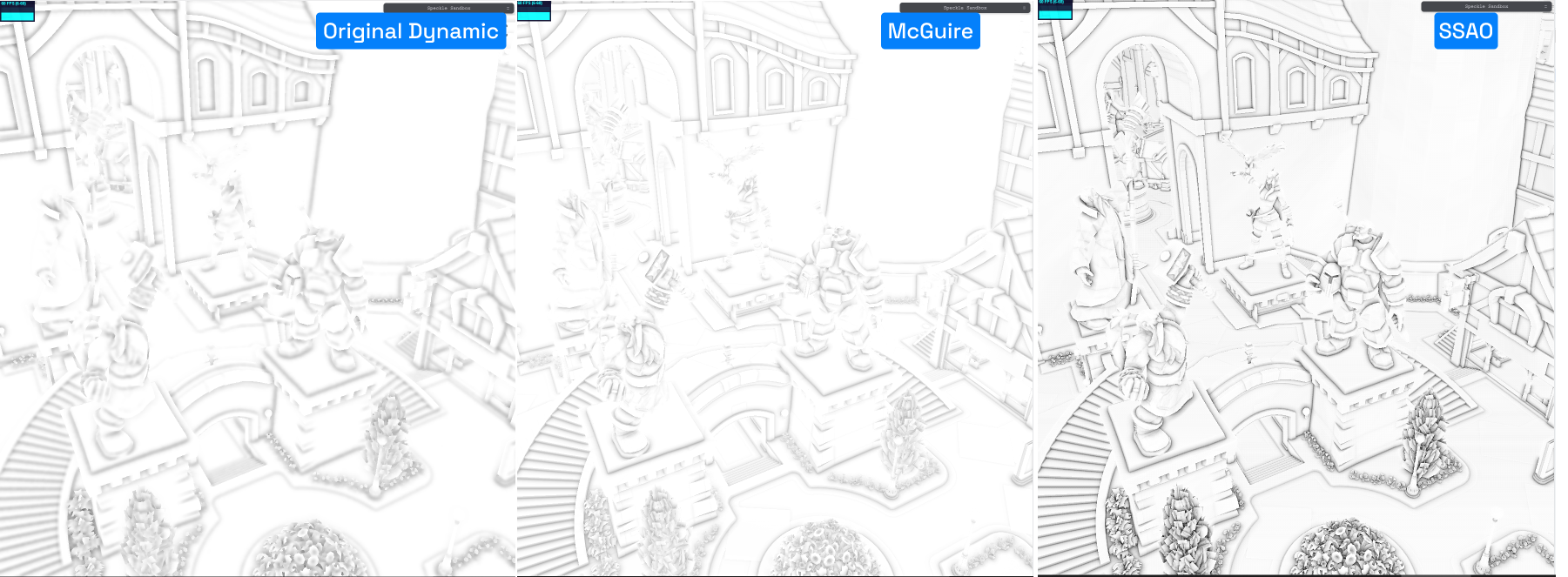

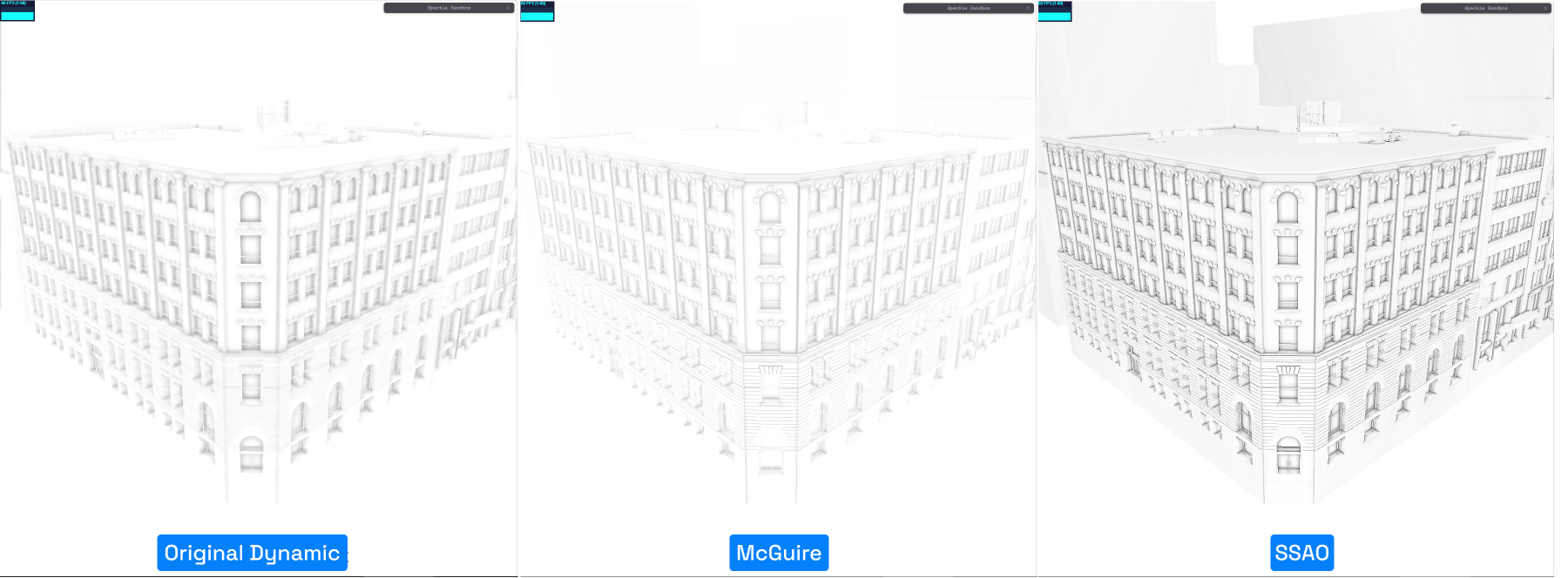

With these in mind, we went along and compared the three algorithms. The first two were very similar, as expected, but the original McGuire estimator performed slightly better. In our comparison images, we’ll analyze only the last two algorithms, McGuire and SSAO, and compare them with the original dynamic SAO for reference. Here are some example comparisons:

First, let’s describe the main difference between McGuire and SSAO. McGuire uses an estimator to determine the AO value for any given pixel, which is only loosely based on its surroundings. SSAO tries to solve the AO problem by actually checking depth values in the neighbourhood of all pixels, comparing them, and then determining which, if any, contributes to the centre pixel’s occlusion.

Another obvious observation is how similar the dynamic AO looks to the progressive McGuire one, even though the difference in total sample count between the two is quite significant. This mostly defeats the purpose of having progressive AO.

To get a better idea of which algorithm yields better results, let’s compare them to Blender’s Eevee ambient occlusion, which also seems to be screen space.

There are notable similarities between the two, which work in our favour and helped us choose the algorithm for our progressive ambient occlusion: SSAO.

Depth Buffer Woes

One issue we encountered while developing our progressive AO was some strange artefacts visible only on macOS:

During the depth pass, we encode the fragment’s floating-point depth value into the RGBA channels of an unsigned-byte (8 bits per channel) texture attachment. This saves a lot of bandwidth when sampling it, compared to using a float32 attachment. The encoding is ThreeJS’s stock implementation, so we weren’t doing anything unusual there that could cause the artefacts. No amount of biasing of the depth sample fixed the issue either, so we had to find another solution. After several failed attempts, which involved changing the encoding itself or using a hardware depth texture attachment, we settled on changing the actual depth being written in the depth pass: instead of outputting perspective depth, we output linear depth. You can see this here; with this change and a slight bias, the artefacts were all gone.
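To illustrate why the choice between perspective and linear depth matters for precision, here is a small TypeScript sketch of the two standard conversions from view space Z to a [0, 1] depth value. The function names and the near/far values are ours, chosen for illustration, and the viewer’s shader may differ in its details. Perspective depth crowds most of the scene into values very close to 1, while linear depth spreads them evenly between the near and far planes.

```typescript
// viewZ is negative in front of the camera (view space looks down -Z).

// Linear depth: 0 at the near plane, 1 at the far plane, evenly distributed.
function viewZToLinearDepth(viewZ: number, near: number, far: number): number {
  return (viewZ + near) / (near - far);
}

// Perspective depth: 0 at the near plane, 1 at the far plane, but with most
// of the value range spent close to the near plane.
function viewZToPerspectiveDepth(viewZ: number, near: number, far: number): number {
  return ((near + viewZ) * far) / ((far - near) * viewZ);
}

// Illustrative camera planes (assumed values).
const near = 0.1;
const far = 1000;

// A point halfway into the scene.
const halfway = -500;
const linearHalfway = viewZToLinearDepth(halfway, near, far);
const perspectiveHalfway = viewZToPerspectiveDepth(halfway, near, far);
console.log(linearHalfway.toFixed(5), perspectiveHalfway.toFixed(5));
```

With these planes, a point 500 units away sits near the middle of the linear range but above 0.999 in perspective depth, leaving very few distinct encoded values for the far half of the scene.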

Variable Screen Space Kernel Size

Typical screen space AO implementations have kernel size as a uniform value. This makes sense since typical applications will know the relative dimensions of the scene beforehand so that the kernel size can be “hand-tuned” for best results. With Speckle, we don’t get that luxury either. We can have scenes hundreds of kilometres long/wide/high, and scenes under 1 millimetre. And what’s worse, we can have both at the same time! This means that having a fixed kernel size will not work.

Moreover, the SSAO implementation we originally used specifies kernel size in world space. To illustrate the problem better, imagine we load up a stream of a building with typical dimensions, say in the range of a few tens of metres. For this stream, you’d think: “Huh, well, I guess a kernel size of 0.5m would do just great”. And it does. But as soon as you load another stream with much smaller or much larger dimensions (for any reason), you get visible inconsistencies. If the second stream’s size is 0.5m in total, you get a washed-out AO which does not preserve any details. Another issue with a world space kernel size becomes visible for different scene projections. For example, when the camera is zoomed out a lot and your entire scene becomes smaller and smaller on the screen, that world space radius also becomes too small from a relative point of view, producing odd-looking results.

The solution we employed here was first to switch from a world-space to a screen-space kernel size, and instead of having it at a predetermined fixed value, we computed it on the fly in the shader based on the fragment’s view depth. To do that, we used this reference, but we had to derive the inverse of the function described there. Here is the final method for computing the kernel size for both camera projections.
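The relationship behind this can be sketched in TypeScript as below. This is a simplified approximation under our own assumptions, not the viewer’s exact method: it treats the kernel as a small sphere, ignores the exact sphere-projection correction discussed in the reference, and uses hypothetical names throughout. Given a desired kernel radius in pixels, it returns the view space radius that projects to that size at the fragment’s depth.

```typescript
interface PerspectiveCamera {
  kind: "perspective";
  fovY: number; // vertical field of view in radians
}

interface OrthographicCamera {
  kind: "orthographic";
  frustumHeight: number; // visible height in view space units
}

type Camera = PerspectiveCamera | OrthographicCamera;

// Returns the view space radius that covers `screenRadiusPx` pixels on screen
// for a fragment at positive distance `viewDepth` in front of the camera.
function kernelRadiusAtDepth(
  screenRadiusPx: number,
  viewportHeightPx: number,
  viewDepth: number,
  camera: Camera
): number {
  const screenFraction = screenRadiusPx / viewportHeightPx;
  if (camera.kind === "perspective") {
    // Visible height grows linearly with depth under perspective projection.
    const visibleHeight = 2 * viewDepth * Math.tan(camera.fovY / 2);
    return screenFraction * visibleHeight;
  }
  // Under orthographic projection the visible height does not depend on depth.
  return screenFraction * camera.frustumHeight;
}
```

Under perspective projection, the resulting radius doubles when the fragment is twice as far away, which keeps the kernel’s footprint on screen constant regardless of the scene’s scale or the zoom level.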

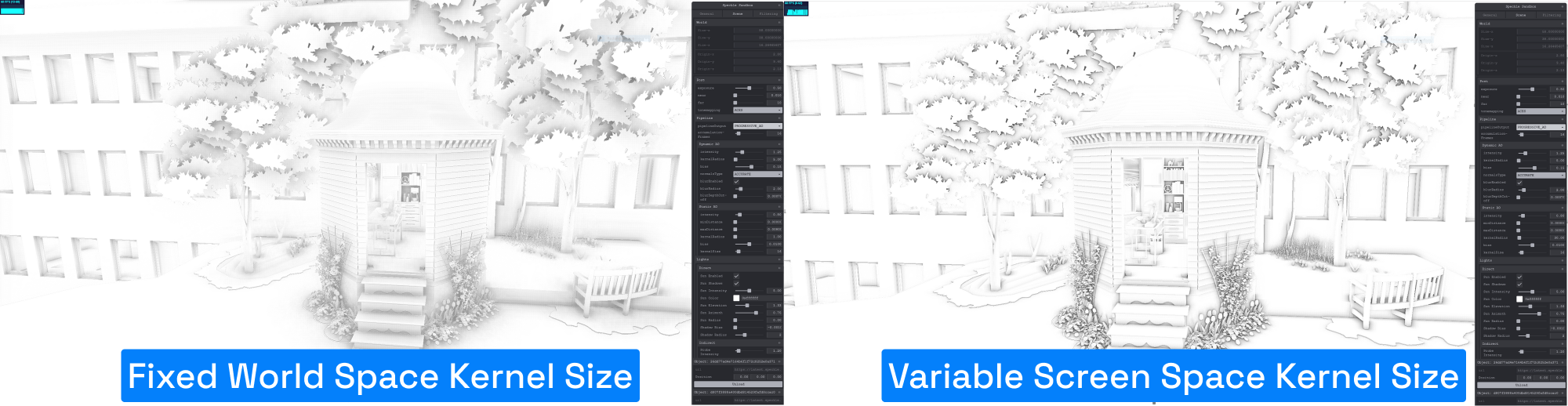

Here is an example which shows a smaller-scale stream alongside a larger-scale stream:

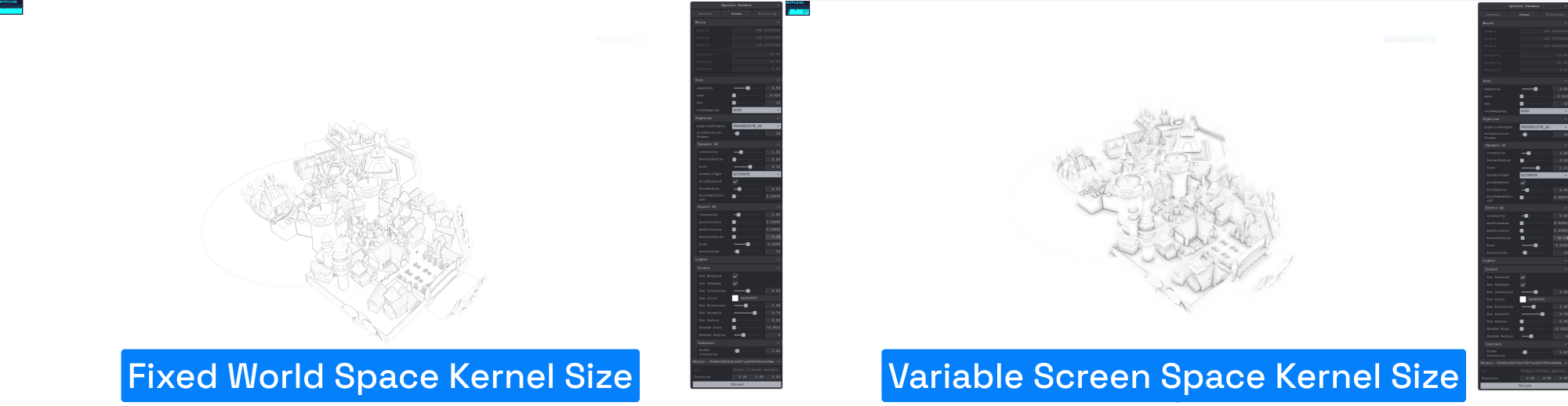

Here is an example which shows the issues visible when zooming out:

Future Development

Currently, our progressive AO generation stage uses a simple, if not primitive, approach to generating ambient occlusion. Even though it does its job quite well for what it is, we may want to look into more advanced and modern AO generation algorithms. Failing that, we could spend some time improving how we generate samples for our kernel. Biasing the samples could yield better results with fewer samples. However, finding the right way to bias the samples meaningfully might be difficult.

Additionally, we could look into accelerating AO generation in general, dynamic or progressive, using a hierarchical depth buffer. This, however, cannot currently be implemented in its true form on WebGL in a way that works everywhere. Even so, there are partial solutions that might speed things up.

References

- https://casual-effects.com/research/McGuire2011AlchemyAO/VV11AlchemyAO.pdf

- https://wickedengine.net/2019/09/22/improved-normal-reconstruction-from-depth/

- https://atyuwen.github.io/posts/normal-reconstruction/

- https://www.researchgate.net/publication/316999882_Progressive_High-Quality_Rendering_for_Interactive_Information_Cartography_using_WebGL

- https://stackoverflow.com/questions/3717226/radius-of-projected-sphere

- https://mtnphil.wordpress.com/2013/06/26/know-your-ssao-artifacts/