This performance blog post is a perfect example of how our Community supports our growth: Jonathon helped us formulate a better viewer experience by notifying us of an issue with our current RTE.

::: tip Check out Part 1 of this post to learn more about our RTE implementation.

:::

Problem

Here’s the thread, and here’s the embedded stream that show’s the issue:

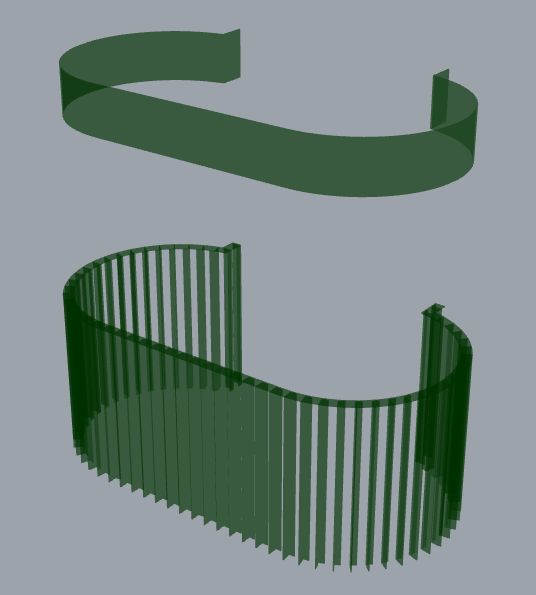

The actual geometry should look nice and smooth like this :

Something is definitely wrong here. We checked the stats of the stream to see if by any chance the geometry is extremely tiny as well as being far away from the world origin. Turns out, the geometry is as normal as it could get. The required precision is in the range of centimeters as one would expect for something like this. Looking into the issue further, made us believe that it is again a precision issue, but since the required precision was not that large, something wasn’t adding up.

Solution

In the previous post about RTE we mentioned briefly the idea that when we split a double into two floats, there are multiple ways of doing it and it all boils down to the tradeoff that you want/need between range and accuracy. If you need to work with very large numbers, you need good range. This will impact the accuracy/precision of those numbers. The reciprocal is valid as well. If you require high accuracy/precision you will need to deal with limited range.

We need to step back a bit and look at our current RTE implementation based off this article where they split double values like so

double doubleHigh = floor(doubleValue / 65536.0) * 65536.0;

floatHigh = (float)doubleHigh;

floatLow = (float)(doubleValue - doubleHigh);

According to the author this translates into :

::: tip A double is encoded into two floats, a high and low. In the low float, 7 of the 23 fraction bits are used for the part of the double after the decimal point, which means that the resolution of the number is 2^7, 0.0078125. This is less than a cm. The remaining 16 bits are used to represent an integer value from 0 to 65535 (216 - 1) in 1 increments.

:::

::: tip The high part uses all 23 bits to represent the numbers 65,536 to 549,755,748,352 ((2^23 - 1) * 65536) in 65536 increments.

:::

They use this method of encoding doubles in two floats for rendering entire solar systems. In their particular case their encoding needs to support a very large range of numbers. Accuracy is less important. At some point the author says that the maximum error for a whole encoded vector is 1.353cm. Component wise, the error is allegedly less than 1cm.

Now, ~1cm accuracy for the scenario where you render entire solar systems is quite insane to be honest, however for our particular case, we would like our double → 2 floats encoding to have higher precision, even if at the cost of a lower range.

Let’s see if we can encode doubles in a way that offers more accuracy. Continuing from where we left off in the previous article, let’s take a look at this approach. There are two distinct things that are happening differently in this implementation:

- The RTE computations inside the vertex program use emulated double precision subtraction ported from the DSFUN90 library written by David H. Bailey originally for FORTRAN. We tried finding an original reference for it, however all the links pointing to it seem dead. We did find this which seems to have updated versions of some some of his libraries, and also this from NVIDIA.

- The double → 2 floats encoding is different, and is in some ways more simple and easy to understand.

Emulating double precision subtraction would look like this:

vec3 t1 = positionLow - u_cameraEyeLow;

vec3 e = t1 - positionLow;

vec3 t2 = ((-u_cameraEyeLow - e) + (positionLow - (t1 - e))) + positionHigh - u_cameraEyeHigh;

vec3 highDifference = t1 + t2;

vec3 lowDifference = t2 - (highDifference - t1);

This will help with precision since it emulates how double precision math would work, using two pairs of floats instead of single doubles.

We’re mostly interested in the double → 2 floats encoding at this point, so let’s start with that. The encoding we’re trying to introduce is the following:

const high = new Float32Array([doubleValue])[0]

const position_high = high

const position_low = doubleValue - high

It’s that simple! What we’re doing is first we’re casting the the double value to float. This will be our high component. Then, we subtract this float value from the float value still using double floating point arithmetic, and using the result as the low component. Both high and low components will be casted down to floats when sent to the GPU

Diving In!

In order to better understand and visualize the differences between several double encoding approaches we’ll be going pencil and paper. An important thing to note, is that we can’t use any calculator apps to do the actual math. They most likely use double floating point arithmetic which does not apply for us. That’s why, we’ll be using:

- This converter to convert from double to float

- This calculator to do the actual floating point math (remember to switch to binary32)

Let’s consider the following values:

// x component of a vertex position

double vertexValue = 549755748352.988812981948127 // this would be in meters

// x component of the camera position

double eyeValue = 549755748342.556380417602694457 // this would be in meters

// The value we will actually use for RTE rendering

double valueToCompute = 10.432432564345432543 // this would be in meters

We’ll be manually computing the values for a single vector component since it’s enough for the example’s sake. We have a vertex and camera position, both as doubles, and we want to encode them as two pairs of floats, push them to the GPU where we’ll be computing the vertex position relative to the eye. We have a reference value, valueToCompute which would be the ground truth, and the value we need to get as close to as possible, using only floats inside the vertex program.

Let’s see what value we get using the encoding we currently use for our RTE:

double dvertexValue_high = 549755748352

float fvertexValue_high = 549755748352

// conversion error = 0

double dvertexValue_low = 0.988812981948127

float fvertexValue_low = 0.988812983036041259765625

// conversion error = 1.087914259765625E-9

double dcamera_high = 549755682816

float fcamera_high = 549755682816

// conversion error = 0

double dcamera_low = 65526.556380417602694457

float fcamera_low = 65526.5546875

// conversion error = -0.001692917602694457

// Substraction done with single floating point math

float highDiff = 65536

float lowDiff = -65525.56640625

float result = 10.433594

// final result error = 0.001161435654567457

Now let’s see what we get using the new encoding:

double dvertexValue_high = 549755748352.988812981948127

float fvertexValue_high = 549755748352

// conversion error = -0.988812981948127

double dvertexValue_low = 0.988812981948127

float fvertexValue_low = 0.988812983036041259765625

// conversion error = 1.087914259765625E-9

double dcamera_high = 549755748342.556380417602694457

float fcamera_high = 549755748352

// conversion error = 9.443619582397305543

double dcamera_low = -9.443619582397305543

float fcamera_low = -9.44361972808837890625

// conversion error = -1.4569107336325E-7

// Substraction done with single floating point math

float highDiff = 0

float lowDiff = 10.4324335

float result = 10.432433

// final result error = 0.000000435654567457

The new encoding precision error is several orders of magnitude smaller than the old encoding. And this, without using emulated double precision subtraction.

For the sake of the argument, the author of the article on which our old RTE implementation is based off, states:

::: tip While not 1 cm accuracy, this is close, and we could double the accuracy along one dimension if we halve the maximum value.

:::

Let’s give it a go!

// Here we reduced increments from 65536(2^16) to 256(2^8):

// double doubleHigh = Math.Floor(value / 256) * 256;

// high = (float)doubleHigh;

// low = (float)(value - doubleHigh);

double dvertexValue_high = 549755748352

float fvertexValue_high = 549755748352

// conversion error = 0

double dvertexValue_low = 0.988812981948127

float fvertexValue_low = 0.988812983036041259765625

// conversion error = 1.087914259765625E-9

double dcamera_high = 549755748096

float fcamera_high = 549755748352

// conversion error = 256

double dcamera_low = -9.443619582397305543

float fcamera_low = -9.44361972808837890625

// conversion error = -1.4569107336325E-7

// Substraction done with single floating point math

float highDiff = 0

float lowDiff = 10.4324335

float result = 10.432433

// final result error = 0.000000435654567457

We get the same result as with the new encoding! So this is another viable solution for increasing accuracy/precision.

Results

With both the new encoding and the emulated double precision subtraction, we get nice and precise looking geometry:

Takeaways

There is caveat however. The number we’ve tested, is huge. Hundreds of billions of units. If in meters, that would make hundreds of millions of kilometers. The encoding works up to this range, however, at some point, when the numbers get astronomically large, the encoding begins to loose precision hard. We haven’t tested extensively to see what’s the limit with this encoding, however going into the range of tens of trillions made the final error be ~0.56.

Another note to make here is regarding computation of vertex normals. Since we’re using Three.js, their implementation uses the existing position buffer to compute the normals, which is already down casted to float. In large numbers & high precision situations, the normals will also suffer from inaccuracies. The fix is straightforward, we compute the normals ourselves using the original double format vertex positions.

Conclusion

In conclusion, we’ve showed two ways in which we can considerably improve our original RTE implementation as well as fixing the issues originally reported by Jonathon Broughton (many thanks!) on our Community Forum. According to the pencil and paper calculations from this article we should be able to theoretically display geometry with vertex positions in the range of hundreds of millions of kilometers within a precision of under a micron. Since a few examples aren’t really enough in order to generate true certainty, We think that the true general precision we’ll be having is somewhat lower (probably somewhere along the lines of just under a millimeter in the case for extremely large numbers like the ones we’ve tested). Even so, we think that such a range, coupled with the already mentioned precision should suffice for displaying most, if not all, geometries that will get thrown at our Speckle Viewer.

References

- https://help.agi.com/STKComponents/html/BlogPrecisionsPrecisions.htm

- https://github.com/virtualglobebook/OpenGlobe/tree/master/Source/Examples/Chapter05/Jitter/GPURelativeToEyeDSFUN90

- https://prideout.net/emulating-double-precision

- https://blog.cyclemap.link/2011-06-09-glsl-part2-emu/

- http://blog.hvidtfeldts.net/index.php/2012/07/double-precision-in-opengl-and-webgl/

- http://mercury.pr.erau.edu/~siewerts/extra/code/digital-media/CUDA/cuda_work/samples/2_Graphics/Mandelbrot/Mandelbrot_kernel.cuh

- https://www.davidhbailey.com/dhbsoftware/

- https://www.h-schmidt.net/FloatConverter/IEEE754.html

- http://weitz.de/ieee/